One of the things that we constantly evaluate when we outline the steps to follow in BARO is how the ecosystem of autonomous vehicles around us is evolving and what the correct times are to introduce the products to the market, based on social adaptation to new autonomous technologies.

Small steps

Looking back at what we have said at BARO from the beginning about taking small steps towards putting an autonomous vehicle on the streets, we see that technologies for autonomous vehicles are evolving gradually. For example, the European Union law that promotes object recognition technologies, so that the car can see traffic signs or recognize a road user, is finally being applied to standard vehicles. Beginning in 2022, new vehicles entering the European market are progressively required to include Automated Emergency Braking that can detect cyclists and pedestrians, as well as Intelligent Speed Assistance that recognizes traffic signs and helps the driver keep the vehicle within the speed limit. Indeed, we have seen these autonomous vehicle functionalities slowly being added to new cars. The change is gradual, and we have not yet reached a point where the user can say, “We are in the presence of an intelligent car.” Users do not necessarily notice the introduction of new autonomous technologies, and we are far from the Level 5 autonomy that many companies speak of in advertisements.

The hybrid environment dilemma

Once again, we maintain that a hybrid environment is not ideal: cars driven by people and cars driven by computers are not fully compatible. This is one of the main challenges ahead. In recent months, Amazon has reported more accidents in facilities where robots and people work side by side than in environments with only people or only robots.

Why is this happening? Because a robot cannot correctly predict human behavior. We humans have learned to anticipate what other people will do. Many signals in the human body indicate what a person is about to do, and one can only detect them through observation and by reading non-verbal cues. When you look at the hands, the face, and the position of the body, you can predict how a person is going to behave: whether they will go forward, back up, slow down, or not stop at all. A movement of the hands may be a gesture for you to pass. The position of the feet may indicate that the person will remain still and not cross the road. However, the systems currently in existence cannot fully interpret all of these small human actions.

Why can’t the computer interpret this information? Because doing so requires more computing power and speed than the computers currently installed in vehicles can provide. As a result, many variables cannot yet be processed by the computer. We are on a gradual path to improving how well computers understand their environment.

Meanwhile, simulators have begun to appear in large numbers. We have simulators of real environments, simulators of semi-realistic environments with traffic signs drawn on screens placed on the sides and figures that pretend to be pedestrians, rain simulators, snow simulators, and more. We receive at least two or three proposals per day on social networks and through emails from companies that want to sell us synthetic data. By synthetic data, I mean computer-created data based on virtual environments; many also sell city simulations, which are representations of how cities move.

When I started to examine these simulations and how they presented their data, I found that most of the models did not represent real situations; they were ideal environments. I did not spend a lot of time on them. If someone reading can tell me otherwise, they are welcome to; in my experience, however, these are fictional scenarios. In England, for example, we have a number of small logistics companies that operate home delivery vans and park on the sidewalk. I did not see a vehicle parked on the sidewalk in any of the simulations. I did not see any vehicles enter a prohibited zone, nor did I see any vehicles parked as they would be in Milan, where they park at a 45° angle, straddling the sidewalk and the street.

Thus, under these circumstances, we must ask: how is a driverless car trained with ideal models going to understand these real-life conditions? How can the system predict that the vehicle in front will signal, place a beacon, and brake in a legally prohibited place, possibly stopping in the middle of the street with two wheels on the street and two wheels on the sidewalk? Where are the simulations showing these situations? I have not found them, and that is the main problem that we have to solve.

In addition, we must analyze how we can continue to feed the artificial intelligence models that we already use. At BARO, we aim to show the public, our potential clients, that the most important thing is the way the systems are trained. That is why we introduced our LITA Copilot.

LITA Copilot is a technological gadget that can be used in any vehicle. It is a system that applies characteristics of autonomous vehicles to help drivers drive better. For instance, it can assist them when their vision is reduced by bad weather, such as rain or snow, or when they are distracted. The reality is that accidents do happen, and autonomous technology will help reduce them. Consider this scenario: a person driving a logistics truck is in a hurry and is marking on a sheet that a delivery has been completed. The driver is also tired and is receiving a phone call. From the height of the driver’s seat, the driver may not see that a boy on a bicycle has just crossed in front of the truck. LITA Copilot is a gadget that will warn you of these types of situations: if there is a moving obstacle around you, if you are exceeding the speed limit according to the traffic signs, if there is an obstacle in front of you, and so on.
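To make the three warning situations above concrete, here is a minimal sketch of how such checks could be expressed in code. It is an illustration only, not how LITA Copilot is actually implemented: the `Detection` type, the `copilot_warnings` function, and the 10 m alert radius are all hypothetical names and values chosen for this example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Detection:
    """One object reported by a (hypothetical) perception stage."""
    label: str          # e.g. "cyclist", "pedestrian", "vehicle"
    distance_m: float   # distance from the vehicle, in meters
    is_moving: bool     # True if the object is in motion
    in_path: bool       # True if the object is directly ahead of the vehicle


def copilot_warnings(
    detections: List[Detection],
    speed_kmh: float,
    sign_limit_kmh: Optional[float],
    alert_radius_m: float = 10.0,  # assumed threshold, for illustration only
) -> List[str]:
    """Return driver warnings for the three situations named in the text."""
    warnings = []
    for d in detections:
        if d.distance_m > alert_radius_m:
            continue  # object is too far away to warn about
        if d.in_path:
            warnings.append(f"Obstacle ahead: {d.label} at {d.distance_m:.0f} m")
        elif d.is_moving:
            warnings.append(
                f"Moving obstacle nearby: {d.label} at {d.distance_m:.0f} m"
            )
    # Speed check applies only when a traffic sign limit has been recognized.
    if sign_limit_kmh is not None and speed_kmh > sign_limit_kmh:
        warnings.append(
            f"Speed {speed_kmh:.0f} km/h exceeds limit of {sign_limit_kmh:.0f} km/h"
        )
    return warnings
```

In the truck scenario, calling `copilot_warnings([Detection("cyclist", 4.0, True, True)], speed_kmh=38, sign_limit_kmh=30)` would produce one warning about the cyclist ahead and one about the speed limit. A real device would need far richer inputs than this; the sketch only shows the shape of the decision.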

At BARO, we understand that we can collaborate to reduce accidents today, offering a technological gadget with the driving assistance features that newer vehicles have. This would be valuable for current drivers; at the same time, we can use that same device to improve our data models, looking to the future.

It seems to me that Tesla is developing an attractive option. The company has assembled a supercomputer to analyze data collected from each Tesla vehicle in every city on the planet. Elon Musk has a progressive view of the problem we find ourselves in. Musk says that autonomous driving is challenging and difficult to perfect. Therefore, he has programmed his vehicles to collect information about what is happening around them and has set up a neural network training computer, Dojo, to process large amounts of video data.

LITA Copilot, the next step in the autonomous systems challenge

At BARO, we currently offer a device that can be installed in buses, trucks, and logistics service vehicles. It is also useful for young people who like technology but drive small vehicles and for older people who drive larger vehicles. With LITA Copilot, we are opening a different panorama for data analysis. We focus on different human behaviors that will allow us to understand the real world much better, with a different vision than that of a Tesla driver; more importantly, we have a much more realistic view than a simulated system.

This is our vision for the future at BARO Vehicles. When we say that we are building a computer to be installed on the windshield of a car, some people say, “But you do not build vehicles.” However, I like to think of this as being like when Tesla bought a solar panel company. This was a far-fetched idea for many investors. Why buy a solar panel company? It turns out that Tesla was thinking about electrification and how to make a sustainable product. They recognized that we cannot continue to use fossil fuels to generate the electricity needed to charge the battery of a Tesla; therefore, they came up with the idea of generating energy through solar panels to charge their batteries sustainably.

The concept here is the same. We are doing A to achieve B; we are going through the process step by step. We are working on the construction of the only part of the vehicle that does not exist on the market today. The central computer is not like any mechanical part of the vehicle. It should not be analyzed as a mechanical part, because it is the brain of the vehicle. At this time, we are more focused on how to replace the driver than on the vehicle itself. Replacing the driver means putting the correct data on the computer. In this way, the computer can see with the experience of a human being who has learned to drive and who has developed an understanding over the years of how the world works and how each object exists in space. That is the goal we are setting for ourselves at the moment. To achieve this, we want to install 100,000 LITA Copilots around the planet. This will enable us to gather the volume of data necessary to clearly understand the real world, develop a clear and concrete data model of how road users behave on real streets, and continue our mission of “building the bridge to autonomous vehicles”.

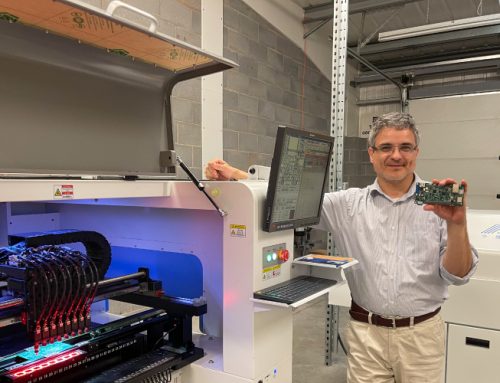

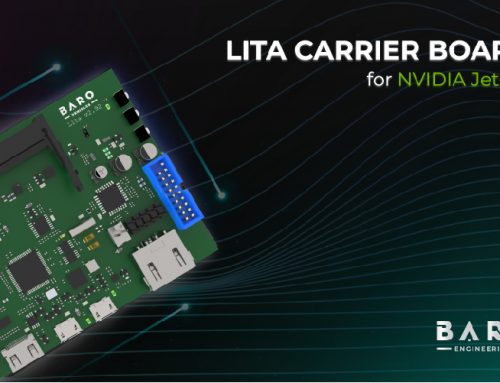

Today BARO turns six years old, and we are celebrating with the manufacture of the new version of our LITA Carrier Board for NVIDIA Jetson processors, our artificial intelligence computer that supports up to four cameras. The next step in the autonomous systems challenge is the LITA Copilot. We are moving to help in the race towards Vision Zero, reducing traffic accidents and fatalities, and we are moving into the autonomous era.